As technology advances rapidly, deepfakes are becoming more convincing and more widespread. These AI-generated videos, images and audio clips can deceive even tech-savvy people. From fake news to financial scams, deepfakes have serious implications for society. But what exactly are they, and how can you protect yourself? Let’s dive into the world of deepfakes and uncover the risks.

What are deepfakes?

They are synthetic media created using deep learning and artificial intelligence (AI). By combining real footage with AI, deepfake software can produce very convincing fake videos, audio or images. These tools manipulate existing media to make it appear as though someone said or did something they didn’t. Many early deepfakes were made for entertainment, but the technology is now being used in harmful ways, including scams, fraud and disinformation.

How do deepfakes work?

Many deepfakes rely on generative adversarial networks (GANs). This machine-learning approach pits two neural networks against each other: a generator creates the fake media, while a discriminator tries to tell it apart from real examples. As the two train against each other, the generator gets better at producing results the discriminator cannot distinguish from the real thing. Deepfake software can also use face-tracking algorithms to map someone’s facial movements, making the fake content even more realistic.
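To make the adversarial idea concrete, here is a deliberately tiny, hypothetical sketch in Python. It is not how real deepfake software works (real systems train deep neural networks on images or audio); it only illustrates the two-player loop. The "forger" is a one-parameter generator G(z) = z + b that shifts random noise, and the "detector" is a logistic discriminator D(x) = sigmoid(w*x + c). The function names, the one-dimensional data and the learning rate are all illustrative assumptions.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def generate(b, noise):
    """Toy generator G(z) = z + b: shifts random noise by a learnable offset b."""
    return [z + b for z in noise]


def d_loss(w, c, real, fake):
    """Discriminator loss: -mean log D(x_real) - mean log(1 - D(x_fake))."""
    real_term = -sum(math.log(sigmoid(w * x + c)) for x in real) / len(real)
    fake_term = -sum(math.log(1.0 - sigmoid(w * x + c)) for x in fake) / len(fake)
    return real_term + fake_term


def g_loss(w, c, fake):
    """Non-saturating generator loss: -mean log D(G(z)); low when D is fooled."""
    return -sum(math.log(sigmoid(w * x + c)) for x in fake) / len(fake)


def d_step(w, c, real, fake, lr):
    """One gradient-descent step on d_loss for D(x) = sigmoid(w*x + c)."""
    gw = sum((sigmoid(w * x + c) - 1.0) * x for x in real) / len(real)
    gc = sum(sigmoid(w * x + c) - 1.0 for x in real) / len(real)
    gw += sum(sigmoid(w * x + c) * x for x in fake) / len(fake)
    gc += sum(sigmoid(w * x + c) for x in fake) / len(fake)
    return w - lr * gw, c - lr * gc


def g_step(b, w, c, noise, lr):
    """One gradient-descent step on g_loss for the offset b, with D held fixed."""
    fake = generate(b, noise)
    gb = sum((sigmoid(w * x + c) - 1.0) * w for x in fake) / len(fake)
    return b - lr * gb


rng = random.Random(0)
real = [rng.gauss(4.0, 1.0) for _ in range(64)]   # stand-in for genuine data (mean 4)
noise = [rng.gauss(0.0, 1.0) for _ in range(64)]  # random input to the generator
w, c, b = 0.0, 0.0, 0.0

for step in range(3):
    fake = generate(b, noise)
    w, c = d_step(w, c, real, fake, lr=0.05)  # detector learns to separate real from fake
    b = g_step(b, w, c, noise, lr=0.05)       # forger shifts its output to fool the detector
    print(f"step {step}: w={w:.3f} b={b:.3f}")
```

Each round, the discriminator takes one step towards separating real from fake samples, then the generator takes one step to make its samples look more "real" to that discriminator, so b drifts towards the genuine data. Even toy GANs like this can oscillate rather than settle, which is one reason real GAN training needs far more careful engineering.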

The dangers of deepfakes

The rise of deepfakes has sparked concern worldwide, especially as the technology becomes more accessible. Here are some major risks associated with deepfakes:

- Disinformation and fake news: Deepfakes can be used to spread false information quickly. Political figures have been targeted with fake videos showing them making false claims or engaging in inappropriate behaviour. This kind of manipulation can sway public opinion and cause serious damage.

- Fraud and scams: Deepfake technology is being used to impersonate individuals in scam calls, particularly targeting the elderly. With convincing audio and video, scammers can trick people into sending money or revealing sensitive information.

- Identity theft: Cybercriminals use deepfakes to steal people’s identities, posing as someone else online. They can create fake social media profiles or videos, fooling friends, family, and even financial institutions.

- Inappropriate content: Deepfakes are often used to create explicit content, putting innocent people’s faces onto fake pornography without their consent. This has devastating consequences for the victims, both personally and professionally.

Examples of deepfakes

Deepfakes have hit the headlines due to their use in high-profile incidents. Some examples include:

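- Barack Obama: In 2018, BuzzFeed and filmmaker Jordan Peele released a video in which the former U.S. president appears to make controversial statements he never said. The video looked real, but Obama’s mouth movements had been manipulated with deepfake technology and the voice was Peele’s impression. It was made as a public warning about deepfakes.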

- Tom Cruise on TikTok: An account using deepfakes of the Hollywood star went viral, showing realistic videos of him performing stunts or magic tricks. Many viewers believed it was genuinely Cruise until it was revealed to be a fake.

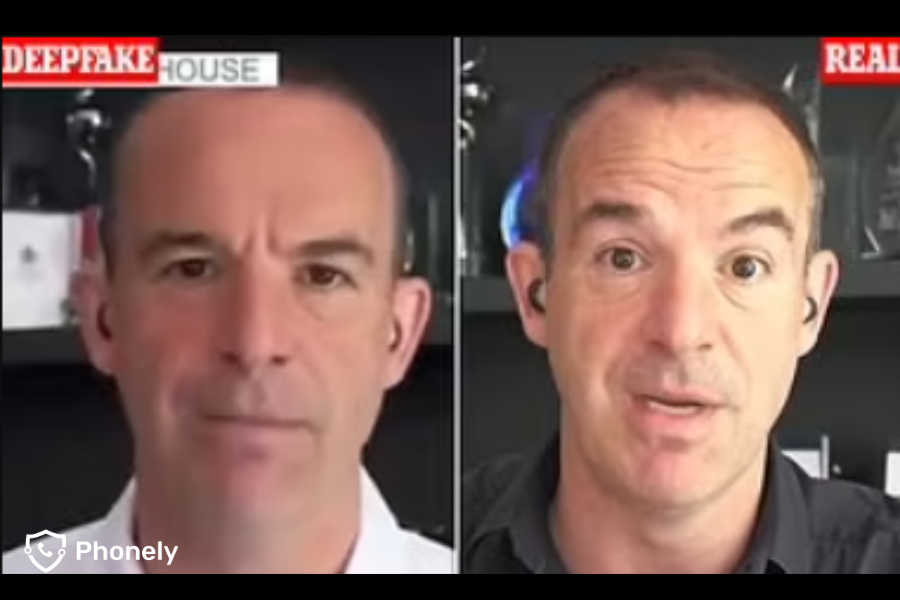

- Martin Lewis deepfake scam: In July 2023, a deepfake video of finance expert Martin Lewis was used in a scam promoting a fake investment scheme. This shows the potential for deepfakes to harm reputations and trick people into parting with their money.

Audio deepfake scams

While video deepfakes are well-known, audio deepfakes have also started to make headlines. These AI-generated voice clones are used by scammers to impersonate individuals in phone calls, voicemails, or audio messages. Here are some notable examples:

- UK energy firm CEO scam (2019): Scammers used an AI-generated imitation of the voice of the chief executive of the firm’s German parent company to trick the UK company’s CEO into transferring €220,000 to an account controlled by the criminals. The voice clone convincingly reproduced the executive’s German accent and manner of speech.

- New Hampshire voter suppression (2024): Deepfake audio of President Joe Biden urged voters not to cast ballots in the New Hampshire primary. The robocall was an attempt at voter suppression using AI-generated audio.

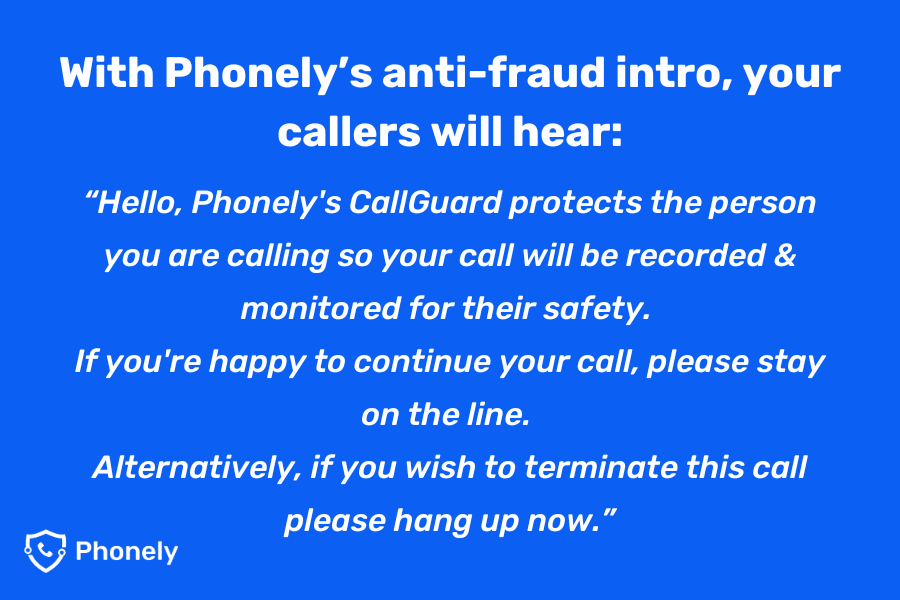

How Phonely’s CallGuard protects against deepfake audio scams

At Phonely, we take the threat of deepfake audio seriously. That’s why we’ve developed CallGuard, our advanced scam prevention technology. CallGuard uses sophisticated AI to detect criminal language patterns and other telltale signs of a scam caller, and notifies you when it finds them. Because your call is recorded, you can refer back to the conversation afterwards, whether for peace of mind or as evidence.

When your number is dialled, CallGuard plays an anti-fraud introduction before the call is connected, to deter callers and confuse automated calling machines. At this point, before your phone has even rung, many criminals think twice and hang up.

Legal & ethical issues surrounding deepfakes

As deepfakes become more dangerous, governments are introducing laws to combat them. However, the legal landscape is still evolving. Here are some key considerations:

- Are deepfakes illegal? Not all deepfakes are illegal, but using them for fraud is a crime, and using them to defame someone can lead to legal action. Sharing sexually explicit deepfakes of someone without their consent is a criminal offence in the UK, and other countries have similar laws.

- Is downloading deepfakes illegal? It depends on the content. Downloading some material, such as sexual deepfakes depicting children, is a criminal offence. For other deepfakes, the legal consequences depend on the intent and nature of the content.

- Are deepfakes illegal in the UK? In the UK, sharing explicit deepfakes of someone without their consent is a criminal offence under the Online Safety Act 2023. The government is working to tighten laws around deepfake creation and distribution.

- When did deepfakes start? The term “deepfake” dates from 2017, when a Reddit user with that name began sharing face-swapped videos, and the technique soon spread across social media platforms. Since then, the technology has improved rapidly, making fakes harder to detect and more dangerous.

How to report deepfakes in the UK

If you believe you have encountered a deepfake, it’s important to take action, especially if it has been used for malicious or fraudulent purposes. Here’s how you can report it in the UK:

- Fraudulent use: If a deepfake is being used in a scam or to defraud you or someone you know, report it to Action Fraud, the UK’s national reporting centre for fraud and cybercrime. You can file a report online at Action Fraud or call their helpline.

- Harmful or offensive deepfakes: If the deepfake content is being used to harass, defame or cause emotional distress, you can report this to your local police force. Many UK police forces, such as Greater Manchester Police and the Metropolitan Police, allow you to report crimes online, including deepfakes that may involve harassment, blackmail or identity theft. Visit their websites to make a report.

- Intimate image abuse: If a deepfake involves non-consensual explicit content, often referred to as “deepfake pornography”, sharing it is illegal under UK law. You can report this type of abuse directly to the police, as it is a serious offence under the Online Safety Act 2023.

- Terrorism or hate crime: Deepfakes that promote terrorism, violence, or incite hatred can be reported via the iREPORTit app or directly through the GOV.UK portal for reporting suspected terrorist content.

By knowing where and how to report deepfakes, you can help mitigate their harmful effects and support efforts to prevent further misuse of this technology.

FAQs

What are deepfakes?

They are AI-generated media that can create highly convincing but fake videos, images, or audio of individuals. They are typically created using deep learning algorithms like GANs.

How can I protect myself from deepfake scams?

Be cautious with unsolicited requests for money or sensitive information. Use scam protection tools like Phonely’s CallGuard to help detect and block scam calls in real time.

Are deepfakes illegal?

While not all are illegal, using them for criminal purposes—such as defamation, fraud, or creating explicit content without consent—is illegal in many countries, including the UK.

How are audio deepfakes created?

Audio deepfakes are created using AI to clone a person’s voice. Scammers can use publicly available audio samples to generate convincing voice clones for use in phishing or fraud attempts.

Can deepfakes be detected?

Yes, there are tools designed to detect these fakes by analysing inconsistencies in audio or video. However, deepfake technology is improving, making detection increasingly challenging.

What should I do if I receive a deepfake call?

If you suspect that you’ve received a deepfake call, here are the steps you should take:

- Do not engage: Avoid sharing any personal information or taking action based on the call. This is because scammers often use deepfakes to manipulate people into revealing sensitive data or making financial transactions.

- Verify the caller’s identity: Contact the person through a channel you already know is genuine, such as calling them back on a number you have saved or looked up yourself (not one the caller gave you), or messaging them on an account you know is theirs.

- Report the incident: If the call appears fraudulent or malicious, report it to Action Fraud. You can report online or by phone. Additionally, you can inform your service provider to block the number or investigate further.

- Use scam prevention tools: If you’re using services like Phonely’s CallGuard, this can help prevent scam calls from reaching you in the first place.

- Document the call: Record details of the interaction, such as the caller’s name, phone number, and anything unusual about the call. This information can help authorities investigate and prevent future scams.

Conclusion

As deepfake technology advances, its potential for misuse grows, from identity theft to political disinformation and financial fraud. While deepfakes can be used creatively, the risks they pose to individuals and businesses are serious. Staying informed and using tools like Phonely’s CallGuard can help protect you against deepfake scams and keep you one step ahead of fraudsters. In a digital world filled with uncertainty, vigilance and advanced protection tools are key to safeguarding your personal and professional life.
